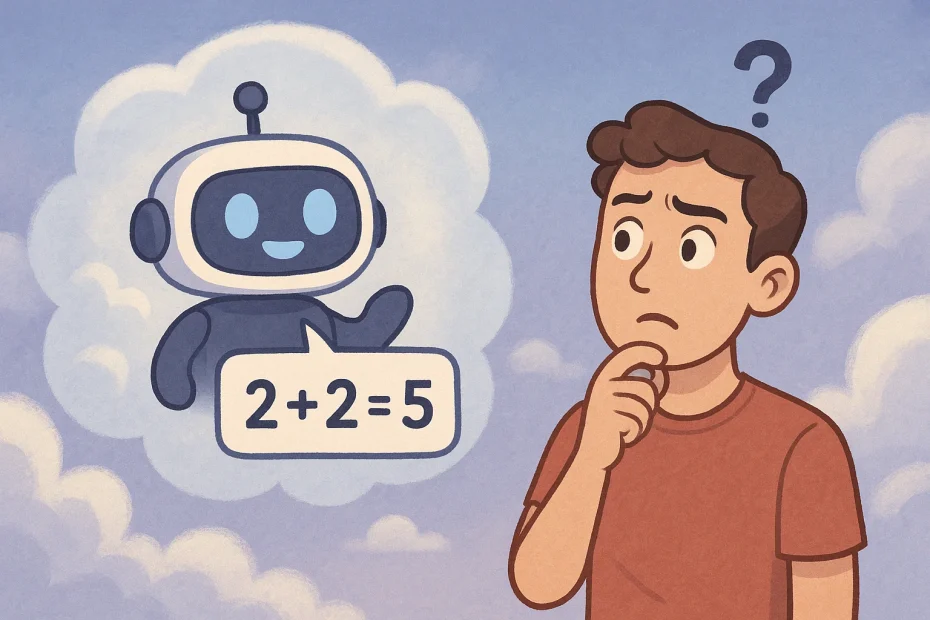

AI is one of the most exciting tools we have today—but it’s not perfect. If you’ve ever asked ChatGPT a question and gotten an answer that sounded confident but was completely wrong, you’ve experienced what’s called an AI hallucination.

It’s a strange term, but it’s an important one to understand. As amazing as AI can be, knowing when (and why) it gets things wrong is key to using it safely and smartly.

What Are AI Hallucinations?

In simple terms, an AI hallucination is when the AI “makes something up” and presents it as fact. It’s not trying to lie—these systems don’t know truth from falsehood the way people do. They’re just predicting what words should come next based on patterns in data.

So when you ask a question, the AI tries to generate a helpful-sounding response. And most of the time, it gets pretty close. But sometimes, it fills in gaps with information that sounds right… but isn’t.

Some Common Examples:

- Quoting fake studies or articles

- Inventing names of experts or books

- Giving historical facts that are slightly off (or completely made up)

The result? You might get an answer that feels trustworthy—until you double-check it and realize it’s not grounded in reality.

Why Do Hallucinations Happen?

AI models like ChatGPT don’t understand facts the way people do. They aren’t pulling answers from a database of verified information. Instead, they generate text based on statistical patterns in their training data.

That means:

- If a topic has conflicting or incomplete data, the AI might guess

- If it’s never seen the specific info before, it may improvise

- It’s better at sounding confident than at being correct

That’s why you’ll sometimes get an answer that looks polished but is still wrong.

How to Spot a Hallucination

It can be tricky, especially if the response seems well-written. But here are a few signs to watch for:

- It packs in a lot of very specific details, especially names, dates, or numbers

- It cites no sources, or it cites sources that turn up nothing when you Google them

- You feel a little too impressed by how “perfect” the answer sounds

If something seems off, it probably is. And if it’s something important—like medical info, legal advice, or financial guidance—always double-check it with a real human expert or trusted source.

So... Should You Still Use AI?

Absolutely. But with the same caution you’d use when talking to a super-smart friend who sometimes makes things up.

AI is amazing for:

- Brainstorming ideas

- Summarizing content

- Rewriting or improving your writing

- Explaining things in simple terms

But it’s not a fact-checking machine. That’s where your own judgment (and a quick Google search) comes in.

The Bottom Line

The truth about AI hallucinations is that they don’t mean the tool is broken; they’re a known side effect of how these tools work right now. And the more you understand them, the more confidently you can use AI in your daily life.

If you're just starting out, take a look at our guide on daily AI use. The more you play around with it, the more natural it becomes to tell the difference between helpful output and... well, a digital daydream.

You're not expected to trust AI blindly—you're expected to explore, learn, and ask questions. And you're doing exactly that just by reading this.